We must not build AI to replace humans.

Humanity is on the brink of developing artificial general intelligence that exceeds our own. It's time to close the gates on AGI and superintelligence... before we lose control of our future.

An essay by Anthony Aguirre

Interactive Summary

The main points in 5 minutes.

Video

Watch the video explainer.

Keep The Future Human

Read the full essay online.

Creative Contest

$100,000+ in prizes for creative digital media.

Keep the Future Human explains how unchecked development of smarter-than-human, autonomous, general-purpose AI systems will almost inevitably lead to human replacement. But it doesn't have to.

Learn how we can keep the future human and experience the extraordinary benefits of Tool AI...

Receive updates from us

Learn how you can get involved in preserving a human future.

The benefits of AI without unacceptable risks

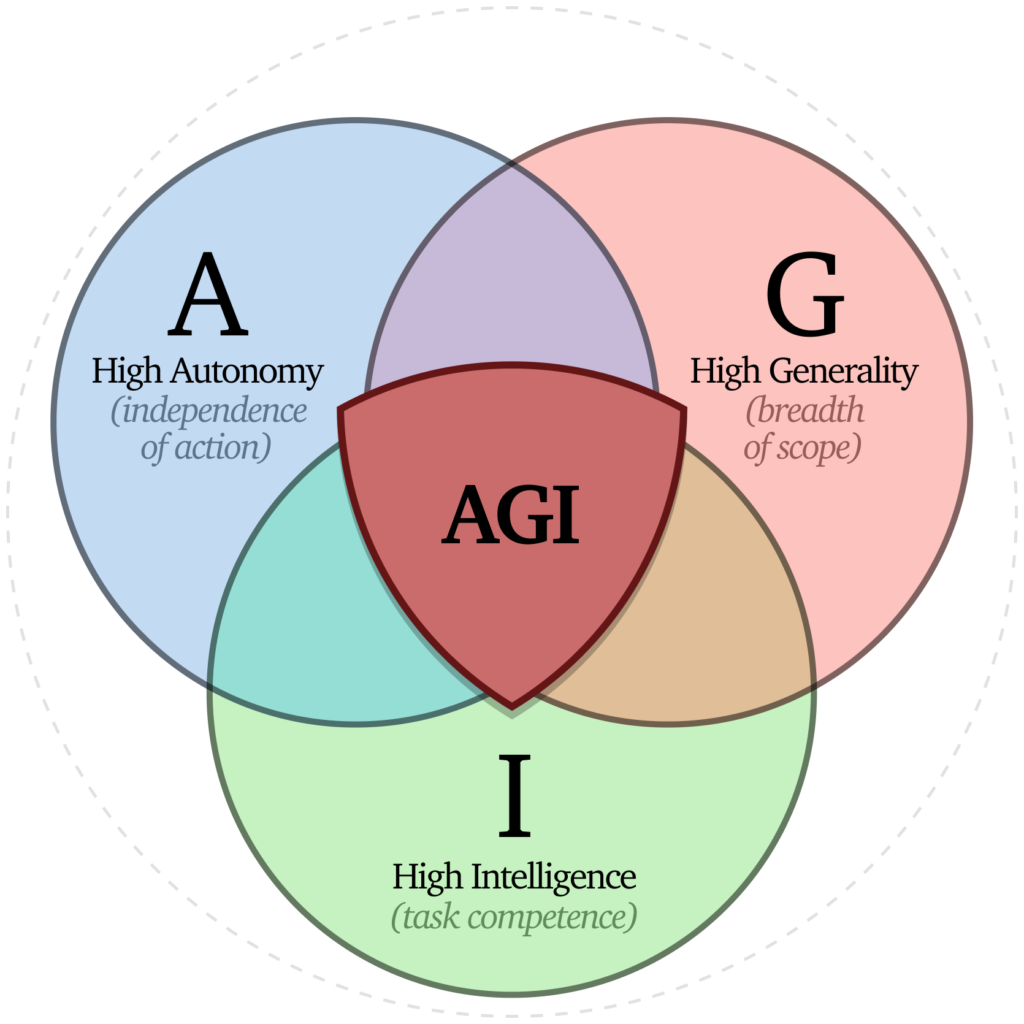

We cannot prevent AI systems from being built and used – and we shouldn't, because they can offer tremendous benefit. But systems that combine high levels of intelligence, generality, and autonomy are highly risky, and must not be built until we can guarantee they are secure.

Keep the Future Human explains why AGI poses unique risks, and defines the type of systems we should build to enhance our society, economy and wellbeing – 'Tool AI'.

Chapter 4: What are AGI and superintelligence?

Four essential measures for a human future

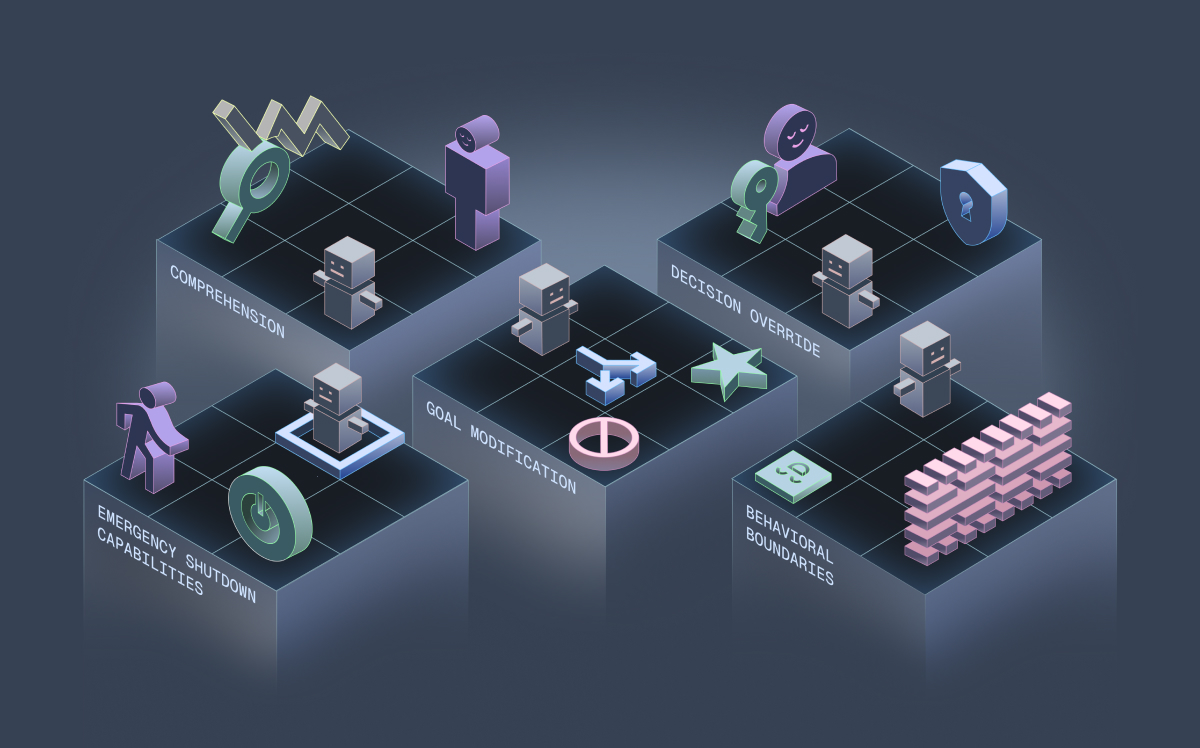

Keep the Future Human proposes four essential, practical measures to prevent uncontrolled AGI and superintelligence from being built, all politically feasible and possible with today's technology – but only if we act decisively now.

Compute oversight

Standardized tracking and verification of AI computational power usage

Compute caps

Hard limits on computational power for AI systems, enforced through law and hardware

Enhanced liability

Strict legal responsibility for developers of highly autonomous, general, and capable AI

Tiered safety and security standards

Comprehensive requirements that scale with system capability and risk

What others had to say about the essay:

Brad Carson, Former Congressman and President of Americans for Responsible Innovation

"Keep the Future Human outlines a compelling and vital path forward on AI that drives innovation and scientific progress while ensuring that AI does not threaten national security and public safety. An essential guide to a better future."

Stuart Russell OBE, Distinguished Professor of Computer Science, UC Berkeley; Director, Center for Human-Compatible AI

"Keep the Future Human makes three very simple points. First, creating artificial general intelligence is suicidal. Second, we're doing it because we think it will yield enormous benefits. Third, we can get those enormous benefits without creating artificial general intelligence. So let's not. This essay is critical and urgent reading."

Jaron Lanier, Computer Scientist, Composer, Author, and Pioneer of Virtual Reality

"This is the most actionable approach to AI. If you care about people, read it."

About the author

Anthony Aguirre is a Co-Founder and the Executive Director of the Future of Life Institute. He is the Faggin Presidential Professor for the Physics of Information at UC Santa Cruz and has done research on an array of topics in theoretical cosmology, gravitation, statistical mechanics, and other fields of physics. Anthony is the author of Cosmological Koans: A Journey to the Heart of Physical Reality, and has appeared in numerous science documentaries. He is a creator of the science and technology prediction platform Metaculus.com, and is a founder of the Foundational Questions Institute.

Also from the author...

Control Inversion

Why the superintelligent AI agents we are racing to create would absorb power, not grant it

Read the essay